OpenAI AgentKit Review

OpenAI AgentKit review: visual workflow builder, evaluation tools, and ChatKit integration for building AI agents. Deep dive into components.

On October 6, 2025, OpenAI introduced AgentKit, a set of tools for developing, deploying, and optimizing intelligent agents that simplifies the process of creating agentic systems.

The launch took place at the third annual DevDay in San Francisco, where CEO Sam Altman presented the platform as a solution to a key industry problem: until now, creating AI agents has required juggling disparate tools, including complex orchestration without versioning, custom connectors, manual evaluation pipelines, and weeks of frontend development before launch. AgentKit combines three key components, each of which addresses a specific need in building agent systems.

Below, we look at the components of the platform, focusing on those that are already available beyond enterprise customers.

Platform components

The three main components of the AgentKit platform are a workflow builder (Agent Builder), an integration management panel (Connector Registry), and a frontend toolkit (ChatKit).

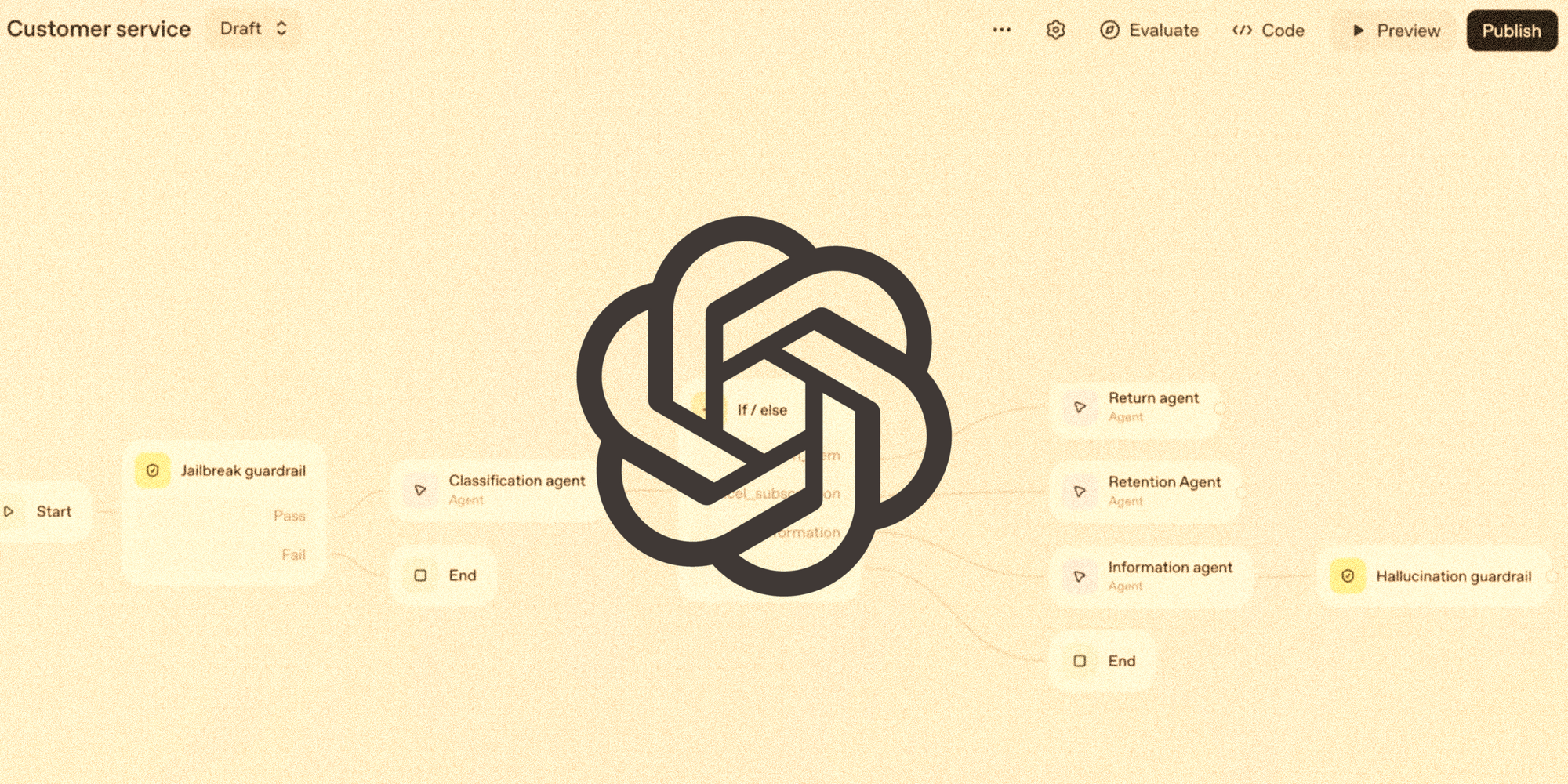

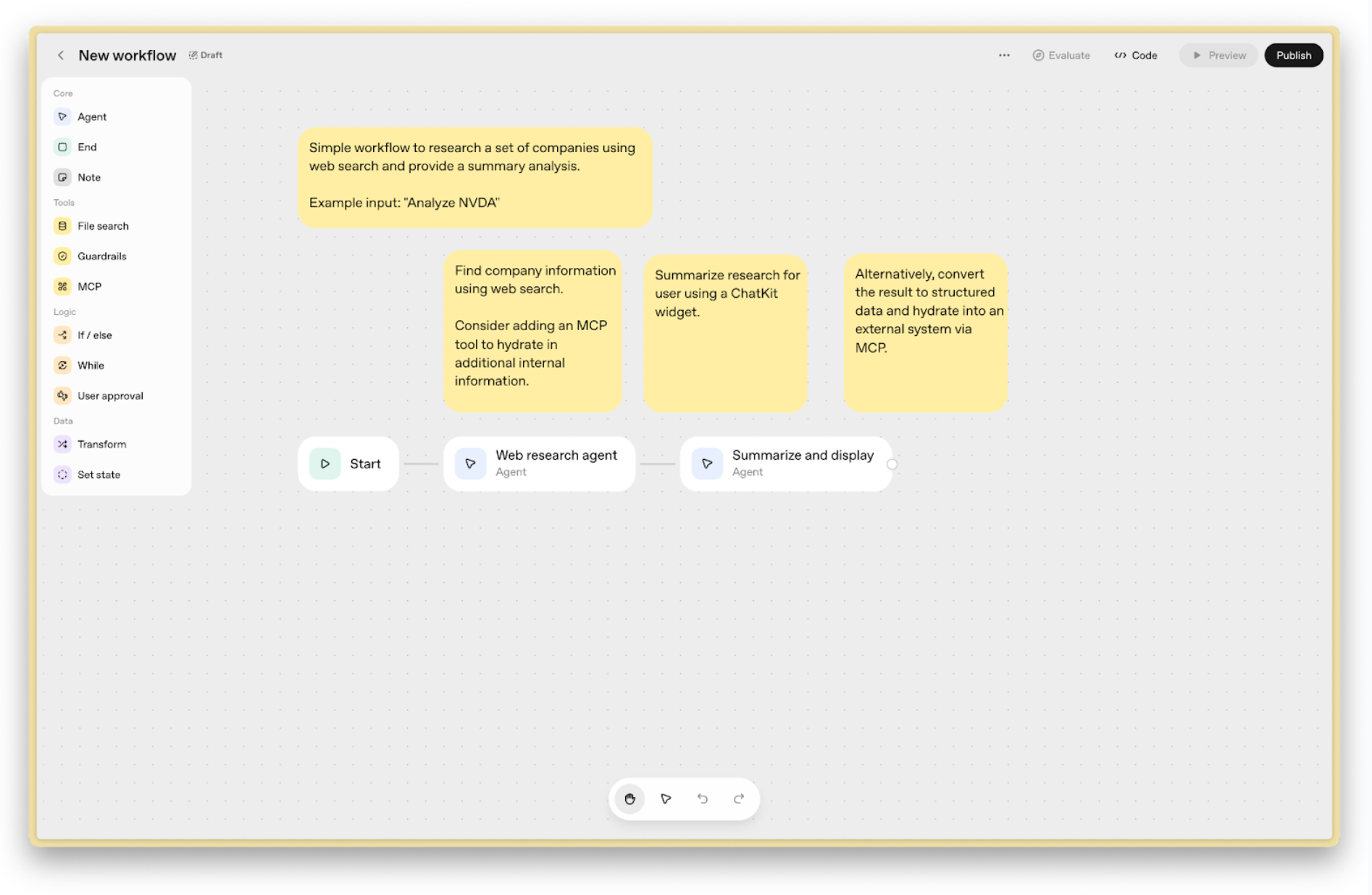

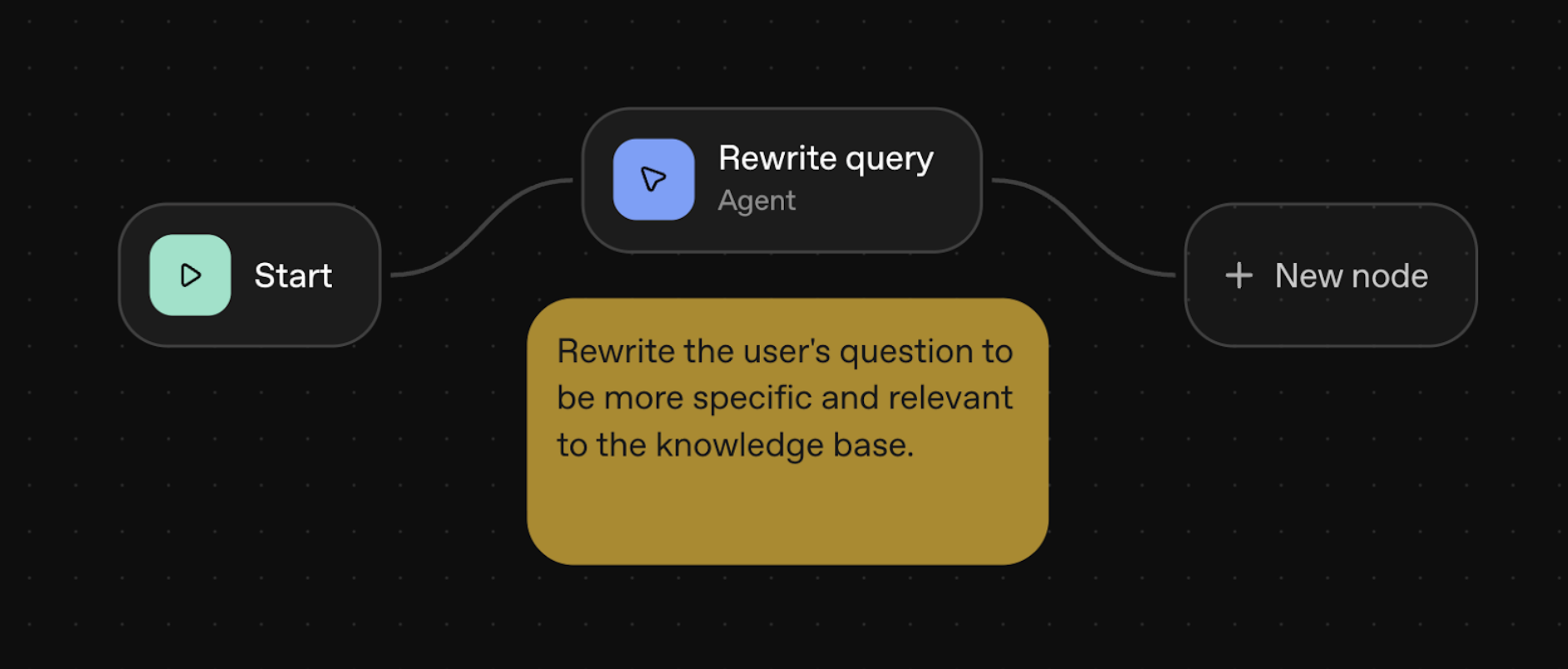

1. Agent Builder is a visual builder for creating multi-agent workflows with a drag-and-drop interface, versioning, and performance evaluation tools. In practice, it requires an understanding of programming logic (conditional statements, loops), knowledge of Common Expression Language (CEL) for setting conditions, and basic API skills for integration. It is more of a low-code platform: it lowers the technical barrier to entry but does not eliminate it.

2. Connector Registry is an integration control panel. It allows administrators to control how tools connect to OpenAI products and includes ready-made connectors for Dropbox, Google Drive, Microsoft Teams, and others. Unlike the other two components, it is still being rolled out gradually, and only to enterprise clients.

3. ChatKit is a toolkit for embedding custom agent chat interfaces into products, which saves time on frontend development. You can create a workflow for a customizable widget on your website and embed it as a ready-made component. If you want even more customization, you can export it as ready-made TypeScript code.

After building the flow, you can launch a preview chat and see how data propagates through the workflow. If everything works correctly, you can "publish" the result: export the code for the Agents SDK or use the workflow ID with ChatKit.

OpenAI promises to add a separate Workflows API and the ability to deploy agents directly in ChatGPT in the near future, which could significantly expand usage scenarios.

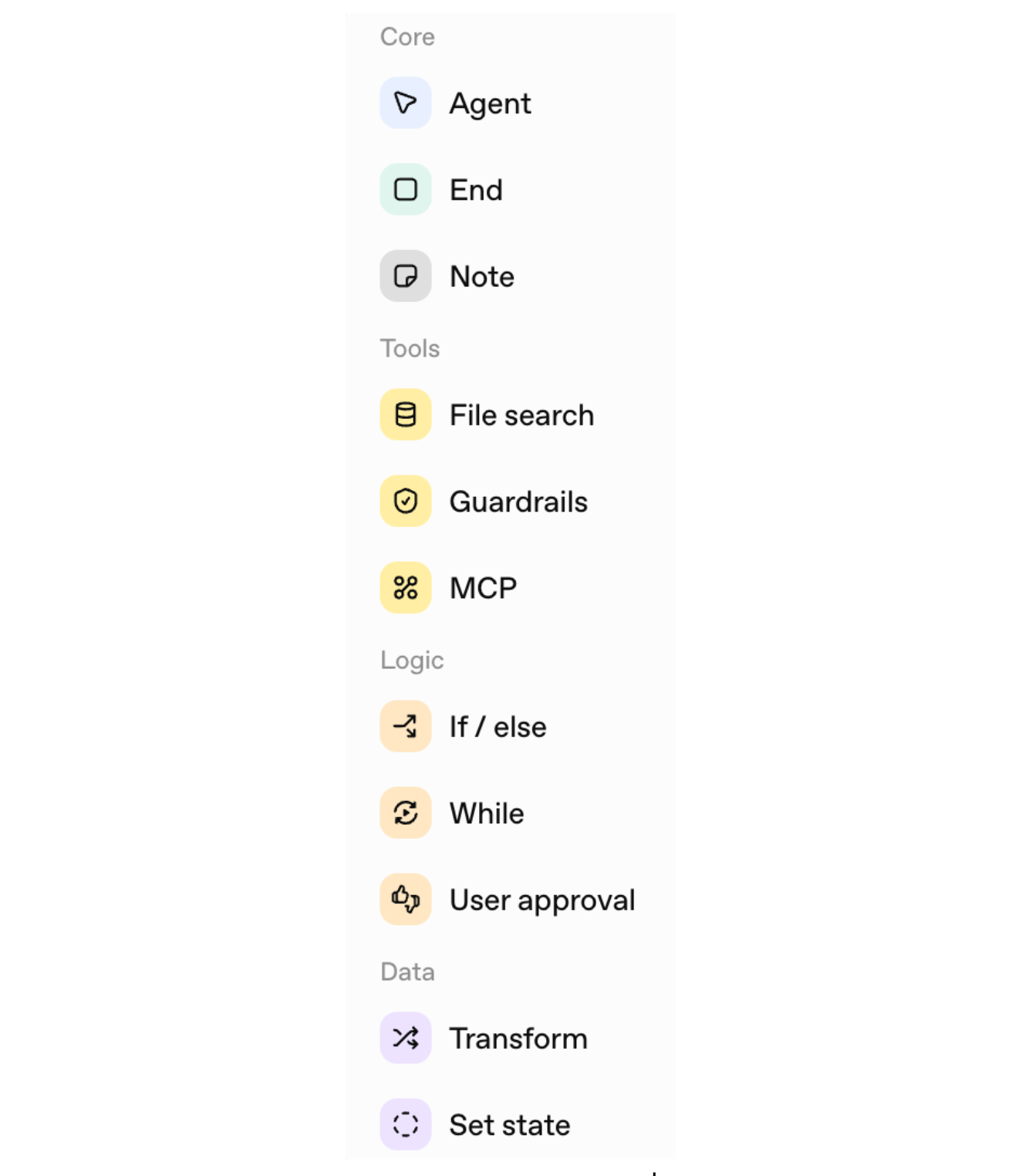

Workflow elements

Start. A mandatory node in every workflow. This is where input variables and state variables are set.

End. A node that can be used to prematurely stop the workflow when a certain condition is met. It also allows you to specify the output structure as a schema.

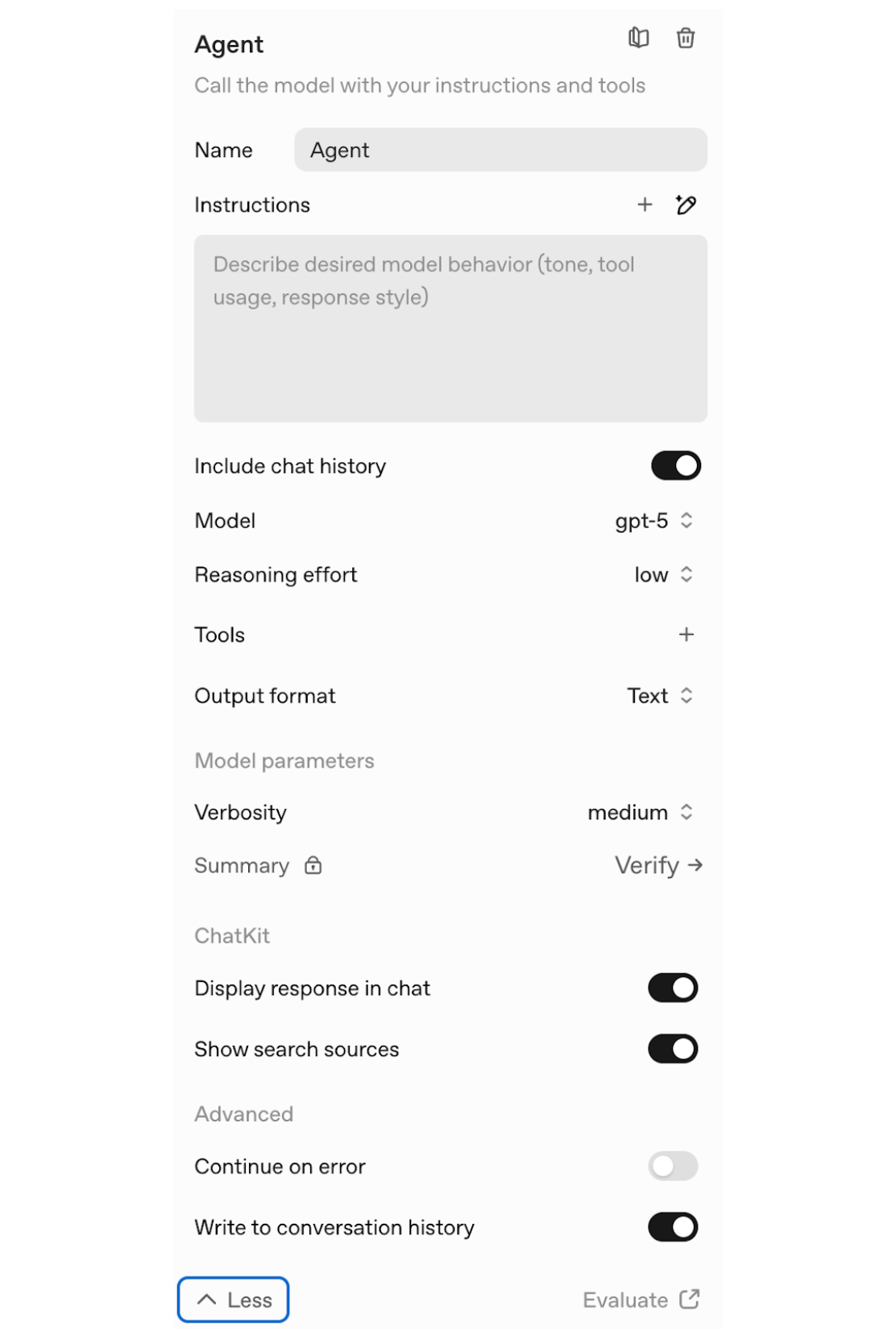

Agent. The following parameters can be specified for each agent node:

- agent name

- instructions

- context

- OpenAI model used

- reasoning effort (minimal, low, medium, high)

- tools available to the agent (Tools). These can be hosted tools (MCP Server, File search, Web search, Code Interpreter, Image generation) or local tools (Function, Custom). The Client tool from ChatKit is also available.

- output format (Text, JSON, Widget). For the JSON format, you can describe the data schema using a special form. Based on this form, a structured output schema is created, similar to the JSON Schema format. Widget allows you to immediately adapt the data to a specific frontend component format, which is created using the Widget Studio tool.
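To make the JSON output option more concrete, here is a minimal sketch of what such a schema and a conforming agent output might look like. The schema, its field names (`category`, `confidence`), and the `conforms` helper are all hypothetical, and the check covers only a tiny subset of JSON Schema. It illustrates the idea of a structured output contract, not how Agent Builder validates output internally.

```python
import json

# Hypothetical schema for an agent that classifies support tickets.
# Written in JSON Schema style; the field names are illustrative only.
TICKET_SCHEMA = {
    "type": "object",
    "properties": {
        "category": {"type": "string"},
        "confidence": {"type": "number"},
    },
    "required": ["category", "confidence"],
}

# Map JSON Schema type names to Python types (small subset).
_TYPES = {
    "string": str,
    "number": (int, float),
    "boolean": bool,
    "object": dict,
    "array": list,
}


def conforms(data: object, schema: dict) -> bool:
    """Check required keys and top-level property types only."""
    if not isinstance(data, dict):
        return False
    if any(key not in data for key in schema.get("required", [])):
        return False
    for key, rule in schema.get("properties", {}).items():
        if key not in data:
            continue
        value = data[key]
        # bool is a subclass of int in Python, so reject it for "number".
        if rule["type"] == "number" and isinstance(value, bool):
            return False
        if not isinstance(value, _TYPES[rule["type"]]):
            return False
    return True


if __name__ == "__main__":
    agent_output = json.loads('{"category": "billing", "confidence": 0.92}')
    print(conforms(agent_output, TICKET_SCHEMA))
```

A downstream node can rely on the declared fields being present, which is what makes the JSON format easier to branch on than free text.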

Note. A node for comments and explanations in the workflow. It does not transform the data stream.

File search. Extracts data from vector stores created in OpenAI. To search in other stores, you can use the corresponding MCP.

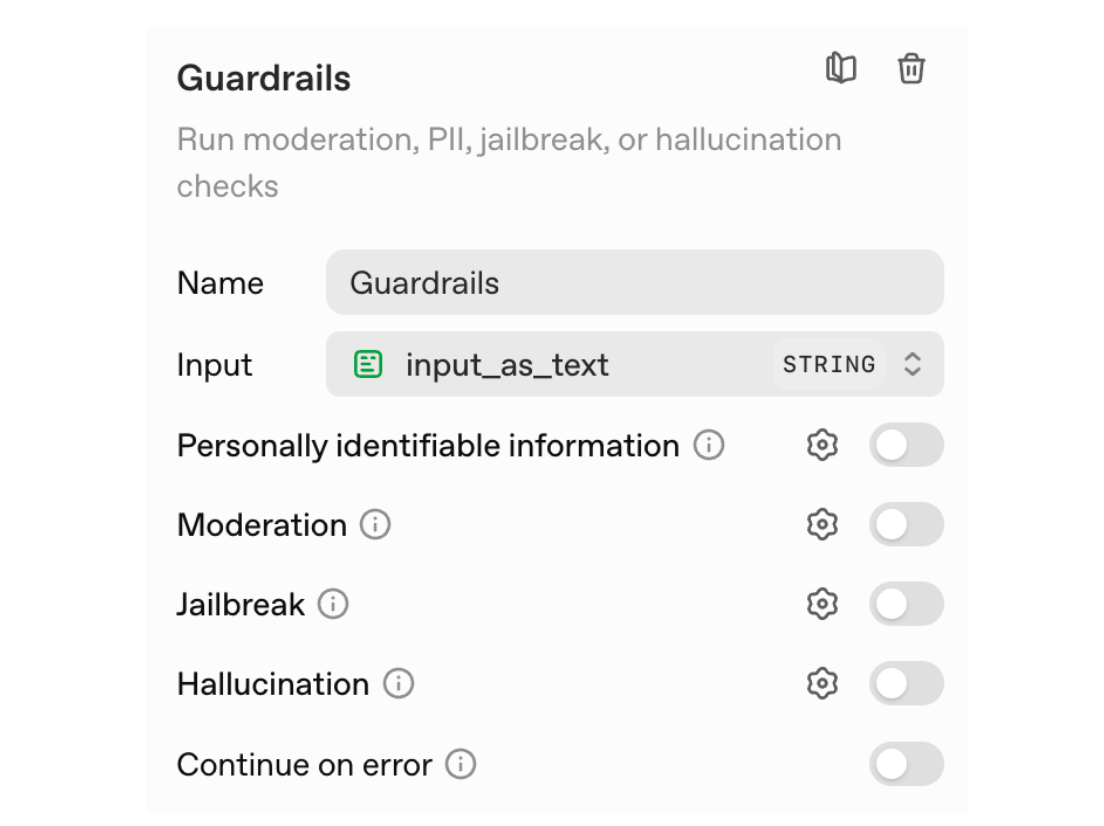

Guardrails. Blocks that protect against unwanted input: personally identifiable information, hallucinations, etc. By default, the node works on a "pass-or-fail" basis: it tests the output from the previous node, and you decide what happens next in the workflow. When Guardrails are triggered, it is recommended to either terminate the workflow or return to the previous step with a reminder about safe use.
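The pass-or-fail behavior can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the Guardrails implementation: the regular expressions are deliberately naive, and `GuardrailResult`, `pii_guardrail`, and `route` are hypothetical names.

```python
import re
from dataclasses import dataclass

# Deliberately simple patterns; real PII detection is much broader.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


@dataclass
class GuardrailResult:
    passed: bool
    reasons: list[str]


def pii_guardrail(text: str) -> GuardrailResult:
    """Fail if the text appears to contain an email address or phone number."""
    reasons = []
    if EMAIL.search(text):
        reasons.append("email address")
    if PHONE.search(text):
        reasons.append("phone number")
    return GuardrailResult(passed=not reasons, reasons=reasons)


def route(text: str) -> str:
    """Pick the next step: continue on pass, end with a reminder on fail."""
    result = pii_guardrail(text)
    if result.passed:
        return "continue"
    return "end: please remove " + ", ".join(result.reasons)


if __name__ == "__main__":
    print(route("What is your refund policy?"))
    print(route("Reach me at jane@example.com"))
```

The point of the sketch is the shape of the node: one check, two outgoing branches, and the workflow author decides what each branch does.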

MCP. Calls third-party tools and services. MCP connections are useful in a workflow that needs to read or search for data in another application, such as Gmail or Zapier. You can use one of the couple dozen MCP servers available on the platform or specify your own.

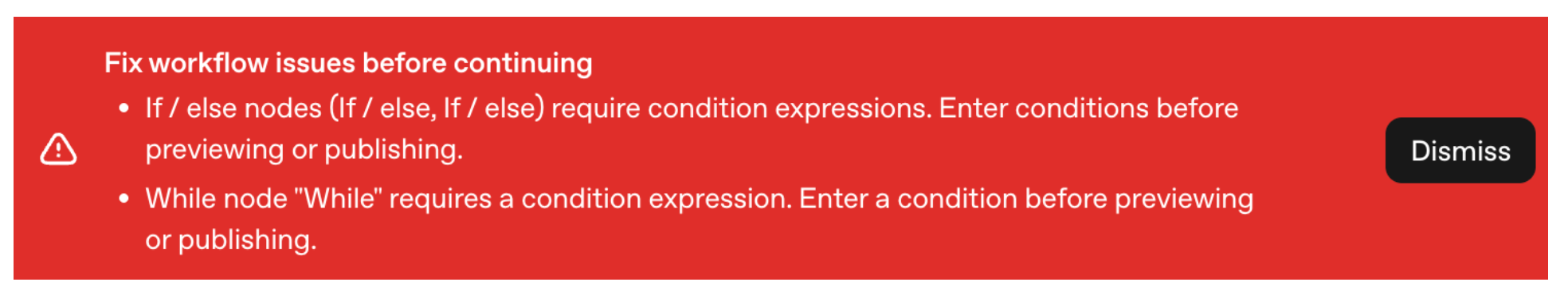

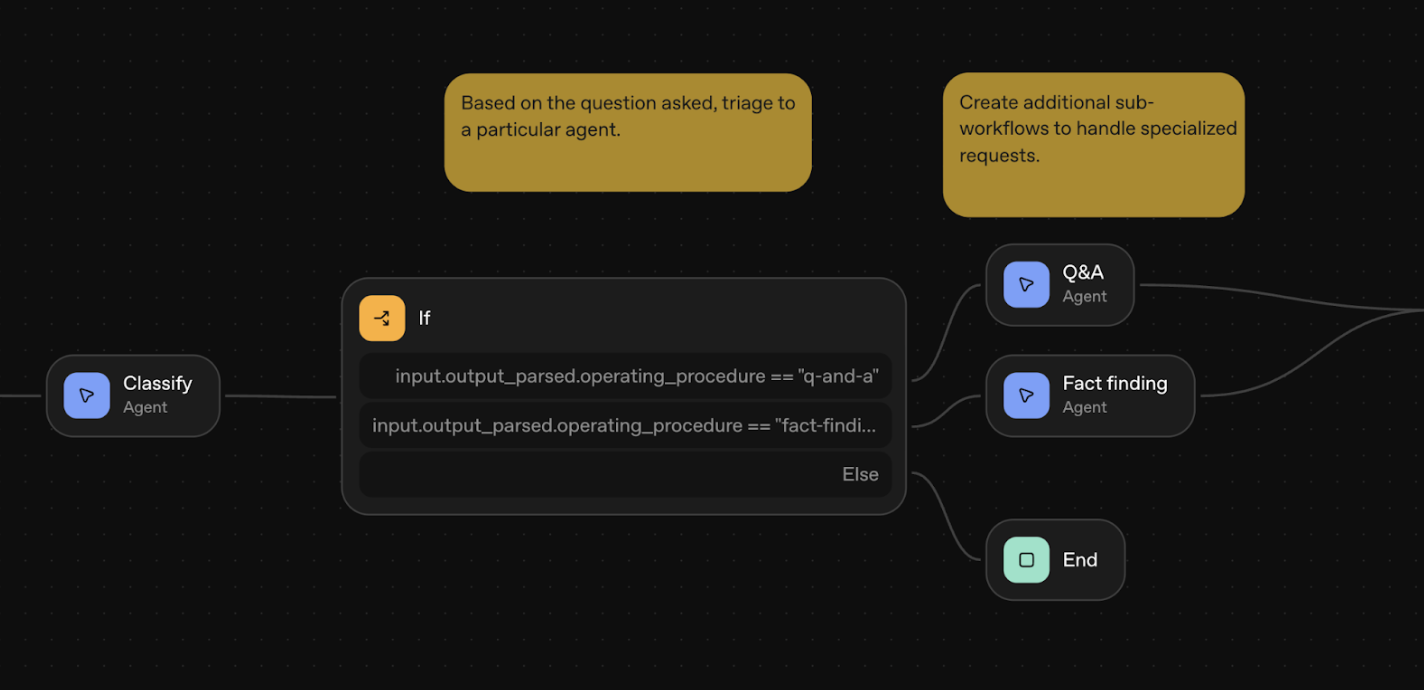

If/else logic node. This node uses Common Expression Language (CEL) to describe conditions. The node splits the data flow into two branches depending on whether the condition is met.
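As an illustration, the sketch below pairs a CEL condition with an equivalent Python predicate. The expression uses standard CEL operators (`==`, `&&`, `>`), but the field names `input.category` and `input.amount` are hypothetical; the variables actually available depend on what the preceding nodes output.

```python
# A CEL condition of the kind an If/else node might hold.
# The field names are hypothetical.
CEL_CONDITION = 'input.category == "refund" && input.amount > 100'


def condition(inp: dict) -> bool:
    """Python equivalent of CEL_CONDITION above."""
    return inp["category"] == "refund" and inp["amount"] > 100


def if_else(inp: dict) -> str:
    """Return the name of the branch the data would follow."""
    return "if_branch" if condition(inp) else "else_branch"


if __name__ == "__main__":
    print(CEL_CONDITION)
    print(if_else({"category": "refund", "amount": 250}))
    print(if_else({"category": "refund", "amount": 50}))
```

Because the condition reads named fields, it works best when the upstream agent uses the JSON output format rather than free text.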

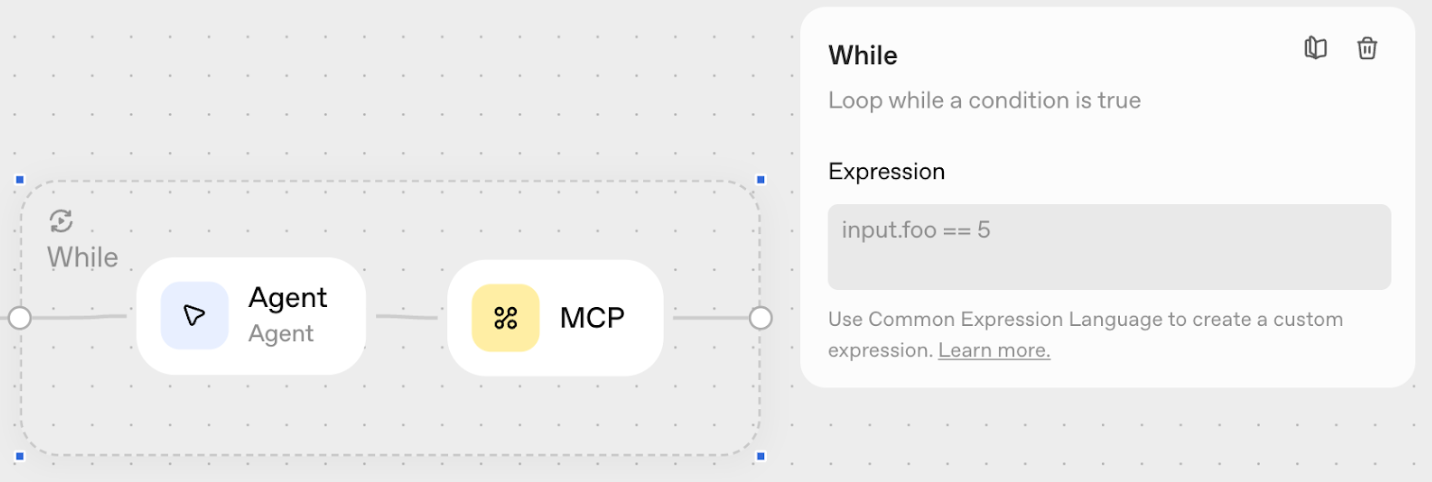

While logic node. A loop with a user-defined condition, also written in CEL. It takes the form of a container that encloses a group of nodes.

Human approval. Passes the decision to end users for approval. Useful for processes where agents create intermediate states that may require user verification before being sent.

For example, an agent sends emails on your behalf. We add an agent node that outputs an email widget, then an approval node immediately after it. We configure the approval node to ask, "Would you like me to send this email?" and, if approved, the workflow proceeds to the MCP node, which connects to Gmail.
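The control flow of this example can be sketched as follows. All names are hypothetical stand-ins: `draft_email` plays the agent node, the `approve` callback plays the approval node, and `send` plays the MCP node connected to Gmail.

```python
from typing import Callable


def draft_email(request: str) -> dict:
    """Stand-in for the agent node that outputs an email widget."""
    return {"to": "team@example.com", "subject": "Follow-up", "body": request}


def run_email_flow(
    request: str,
    approve: Callable[[dict], bool],
    send: Callable[[dict], None],
) -> str:
    """Draft an email, ask for approval, and send only if approved."""
    email = draft_email(request)
    # Approval node: "Would you like me to send this email?"
    if approve(email):
        send(email)  # stands in for the MCP node connected to Gmail
        return "sent"
    return "cancelled"


if __name__ == "__main__":
    outbox: list[dict] = []
    print(run_email_flow("Thanks for the call.", lambda e: True, outbox.append))
    print(run_email_flow("Thanks for the call.", lambda e: False, outbox.append))
    print(len(outbox))
```

The side effect sits strictly behind the approval branch, so a rejected draft never reaches the sending step.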

Transform. Converts output data (e.g., object → array). Useful for forcing types to conform to the desired schema or converting data to be readable and understandable by agents as input.
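A minimal sketch of the two uses mentioned above, reshaping an object into an array and forcing field types. The helper names and the `key`/`value` output layout are assumptions for illustration; in Agent Builder the transformation is configured in the node rather than written as Python.

```python
from typing import Any, Callable


def object_to_array(obj: dict) -> list[dict]:
    """Turn {"a": 1, "b": 2} into a list of key/value records."""
    return [{"key": key, "value": value} for key, value in obj.items()]


def coerce(record: dict, types: dict[str, Callable[[Any], Any]]) -> dict:
    """Keep only the listed fields and cast each one to the desired type."""
    return {name: cast(record[name]) for name, cast in types.items()}


if __name__ == "__main__":
    print(object_to_array({"a": 1, "b": 2}))
    print(coerce({"qty": "3", "price": "9.5", "extra": 1}, {"qty": int, "price": float}))
```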

Set state. Defines global variables for use throughout the workflow. Useful when an agent accepts input and outputs something new that you want to use throughout the workflow. You can define this output as a new global variable.

Quality Evaluation

Two recurring problems in working with large language models are their non-deterministic behavior and the difficulty of handling edge cases within a limited context. To evaluate the quality of agents' work, OpenAI offers several strategies within the Evals system.

The Evals system has four functions: datasets, trace grading, automated prompt optimization, and third-party model support.

1. Datasets are the easiest and fastest way to build an evaluation set. You can import data from CSV. In effect, you create a dataset of reference pairs that map a model input to the expected output. Next, with the dataset and prompt configured, you can generate output data. This shows how the model performs the task with the provided prompt and tools, and you can then annotate the results so that the model's performance can be improved.

2. Trace grading is an end-to-end evaluation of agent workflows. Graders score data in a more algorithmic way, depending on its type: does the answer match the reference exactly, how close is it to the true value, and so on. You can also write Python code in graders to define your own checks.

3. Automated prompt optimization based on user annotation and evaluation results. As a result of annotation, you provide the model with information-rich context that allows it to automatically improve the prompt through the prompt optimizer. The procedure can then be repeated with the updated prompt until you are satisfied with the result. To better understand this process, check out the OpenAI Cookbook.

4. Third-party model support for testing results by connecting external models within a single evaluation platform. However, the list of providers is currently limited to the following: Google, Anthropic, Together, and Fireworks.
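The dataset and grader ideas from items 1 and 2 can be sketched locally in plain Python. This is a hedged illustration, not the Evals API: the CSV columns (`input`, `expected`), the three grader functions, and `fake_model` are hypothetical, and the function signatures here do not claim to match what the platform expects from a Python grader.

```python
import csv
import io
from difflib import SequenceMatcher
from typing import Callable

# Hypothetical evaluation dataset; in practice it would be imported from a CSV file.
DATASET_CSV = """input,expected
What is 2 + 2?,4
Capital of France?,Paris
"""


def exact_match(output: str, expected: str) -> float:
    """1.0 if the answer equals the reference exactly, else 0.0."""
    return 1.0 if output.strip() == expected.strip() else 0.0


def similarity(output: str, expected: str) -> float:
    """How close the answer is to the reference, from 0.0 to 1.0."""
    return SequenceMatcher(None, output.lower(), expected.lower()).ratio()


def contains_answer(output: str, expected: str) -> float:
    """Custom check: the reference appears somewhere in the answer."""
    return 1.0 if expected.lower() in output.lower() else 0.0


def evaluate(rows: list[dict], model: Callable[[str], str],
             grader: Callable[[str, str], float]) -> float:
    """Average grader score of the model over all dataset rows."""
    scores = [grader(model(row["input"]), row["expected"]) for row in rows]
    return sum(scores) / len(scores)


def fake_model(prompt: str) -> str:
    """Stand-in for a real model call, with canned answers."""
    answers = {"What is 2 + 2?": "4", "Capital of France?": "The capital is Paris."}
    return answers[prompt]


rows = list(csv.DictReader(io.StringIO(DATASET_CSV)))

if __name__ == "__main__":
    for grader in (exact_match, similarity, contains_answer):
        print(grader.__name__, round(evaluate(rows, fake_model, grader), 2))
```

The same outputs score differently under each grader, which is why the choice of grader has to match the kind of answer being evaluated: exact match suits short canonical answers, while a custom check tolerates extra wording.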

OpenAI has also introduced new reinforcement fine-tuning (RFT) capabilities for o4-mini (publicly available) and GPT-5 (closed beta) models, including:

- Training models to invoke the right tools at the right time

- Customizable evaluation criteria for specific use cases

Product integration via ChatKit

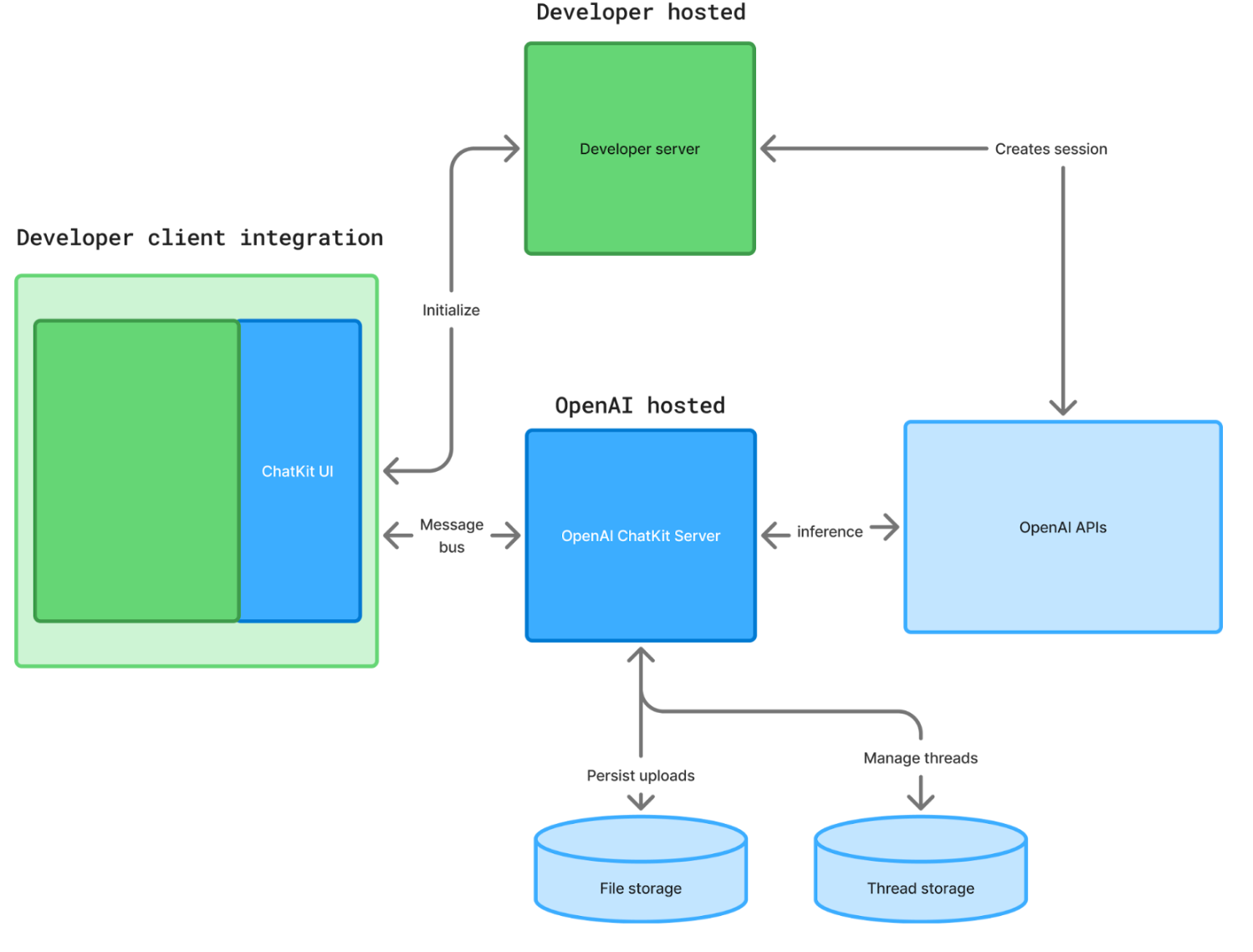

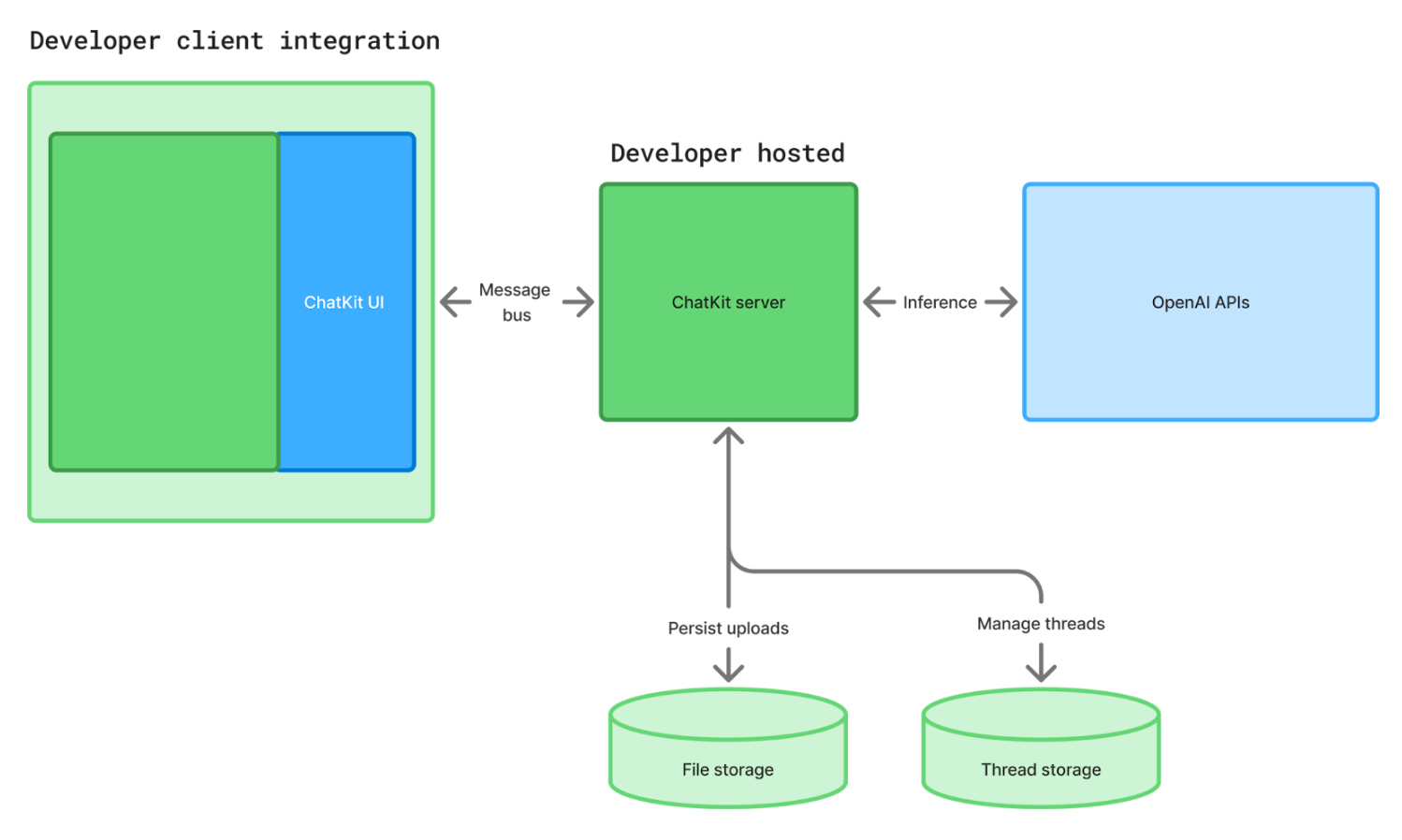

ChatKit is OpenAI's toolkit for creating agent-based chat interfaces. It provides ready-made UI widgets and easy workflow integration. ChatKit has SDKs for TypeScript and Python for working with the backend.

Setup process (details). First, you create a workflow in the visual Agent Builder. If you are integrating chat into your frontend via ChatKit, the workflow in Agent Builder effectively acts as your backend. Next, you link the workflow and set up a mechanism on your server that generates a client token for the ChatKit session; the token is then used by the corresponding React library. In the frontend code, you embed ChatKit widgets, which connect to the workflow automatically.
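The token-generation step might look roughly like the sketch below. Treat every specific as an assumption to verify against the current ChatKit documentation: the endpoint URL, the `OpenAI-Beta` header value, the request body shape, and the `client_secret` response field are not confirmed by this article. The helper names are hypothetical, and the workflow ID is a placeholder.

```python
import json
import urllib.request

# ASSUMPTION: endpoint and beta header; check the current ChatKit docs.
CHATKIT_SESSIONS_URL = "https://api.openai.com/v1/chatkit/sessions"
CHATKIT_BETA_HEADER = "chatkit_beta=v1"


def build_session_request(workflow_id: str, user_id: str, api_key: str) -> dict:
    """Describe the HTTP request that would create a ChatKit session."""
    return {
        "url": CHATKIT_SESSIONS_URL,
        "headers": {
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
            "OpenAI-Beta": CHATKIT_BETA_HEADER,
        },
        # ASSUMPTION: body shape linking the session to a published workflow.
        "body": {"workflow": {"id": workflow_id}, "user": user_id},
    }


def create_client_secret(workflow_id: str, user_id: str, api_key: str) -> str:
    """Send the request from the server and return the client token."""
    spec = build_session_request(workflow_id, user_id, api_key)
    request = urllib.request.Request(
        spec["url"],
        data=json.dumps(spec["body"]).encode("utf-8"),
        headers=spec["headers"],
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        # ASSUMPTION: the response JSON carries the token as "client_secret".
        return json.load(response)["client_secret"]


if __name__ == "__main__":
    # Placeholder values; nothing is sent over the network here.
    spec = build_session_request("wf_placeholder", "user_1", "sk-placeholder")
    print(json.dumps(spec["body"]))
```

Whatever the exact API shape, the design point is that the API key stays on the server and the browser only ever receives the short-lived client token.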

Conclusion

OpenAI AgentKit represents a significant step in the evolution of tools for creating AI agents. The platform addresses the real problem of fragmentation in existing solutions by offering an integrated approach from visual design to deployment.

Strengths:

- Lowering the barrier to entry for creating agent systems, thanks to a visual designer

- Unification of development, testing, and deployment processes within a single ecosystem

- Built-in quality assessment and optimization mechanisms, which are critical for production solutions

- Deployment flexibility: from a fully managed OpenAI solution to a self-hosted option

Current limitations:

- Positioned as a visual builder, but requires programming skills

- The product explicitly creates vendor lock-in on OpenAI models

- Limited support for third-party models in the evaluation system

- No way to generate the workflow itself from a prompt

Prospects. The developer community's reaction has been mixed. Skeptics compare AgentKit to existing low-code platforms such as n8n and Zapier, pointing to similar functionality and a similar visual approach but an overly superficial implementation. However, this comparison misses a key difference: AgentKit is designed specifically for orchestrating LLM agents with deep integration into the OpenAI ecosystem. It is not a universal automation tool, but a specialized platform for agent development. In addition, low-code platforms themselves can be integrated into the workflow via MCP, and vice versa: workflow code can be imported for integration into the product using low-code platforms.

The real test for AgentKit is not its technical capabilities but its economics and ecosystem. OpenAI does not charge extra for the platform; it is included in standard API pricing, and the company is betting on increased consumption of its models. With 800 million weekly active ChatGPT users and 4 million developers, OpenAI has an unprecedented opportunity to standardize the approach to creating AI agents. At this stage, therefore, there is no need to make the platform more complex; OpenAI can instead refine it using feedback and test results from developers.

The platform's success will depend on how well OpenAI can balance ease of use and flexibility of configuration, as well as the speed at which it adds new integrations and expands capabilities beyond its own models. For companies already using the OpenAI ecosystem, AgentKit may be a logical choice for accelerating the development of agent solutions.

Sources

- Updated OpenAI documentation

- OpenAI blog: Introducing AgentKit